I currently have a 1 TiB NVMe drive that has been hovering at 100 GiB left for the past couple months. I’ve kept it down by deleting a game every couple weeks, but I would like to play something sometime, and I’m running out of games to delete if I need more space.

That’s why I’ve been thinking about upgrading to a 2 TiB drive, but I just saw an interesting forum thread about LVM cache. The promise of having the storage capacity of an HDD with (usually) the speed of an SSD seems very appealing, but is it actually as good as it seems to be?

And if it is possible, which software should be used? LVM cache seems like a decent option, but I’ve seen people say it’s slow. bcache is also sometimes mentioned, but apparently that one can be unreliable at times.

Beyond that, what method should be used? The Arch Wiki page for bcache mentions several options. Some only seem to cache writes, while some aim to keep the HDD idle as long as possible.
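For what it’s worth, bcache’s cache modes (writethrough, writeback, writearound) differ mainly in where a write lands first. The toy model below is a simplified sketch, not bcache or LVM code, and the class and method names are made up for illustration. It shows why writeback is the mode that can lose data if the SSD dies before its dirty blocks are flushed to the HDD.

```python
from dataclasses import dataclass, field


@dataclass
class TieredDevice:
    """Toy SSD-in-front-of-HDD device; names are hypothetical, not a real API."""

    mode: str  # "writethrough", "writeback" or "writearound"
    ssd: dict = field(default_factory=dict)  # block -> data held in the cache
    hdd: dict = field(default_factory=dict)  # block -> data on the backing disk
    dirty: set = field(default_factory=set)  # blocks whose newest copy is SSD-only

    def write(self, block: int, data: bytes) -> None:
        if self.mode == "writethrough":
            # Cache the data, but also write it to the HDD before completing.
            self.ssd[block] = data
            self.hdd[block] = data
        elif self.mode == "writeback":
            # Complete as soon as the SSD has it; the HDD is updated on flush().
            self.ssd[block] = data
            self.dirty.add(block)
        elif self.mode == "writearound":
            # Send writes straight to the HDD and drop any stale cached copy.
            self.ssd.pop(block, None)
            self.hdd[block] = data
        else:
            raise ValueError(f"unknown mode: {self.mode}")

    def flush(self) -> None:
        """Copy dirty blocks to the HDD (writeback does this in the background)."""
        for block in sorted(self.dirty):
            self.hdd[block] = self.ssd[block]
        self.dirty.clear()

    def lost_if_ssd_dies(self) -> set:
        """Blocks whose latest contents exist only on the SSD."""
        return set(self.dirty)


for mode in ("writethrough", "writeback", "writearound"):
    dev = TieredDevice(mode)
    dev.write(1, b"save game")
    print(f"{mode:>12}: at risk if the SSD dies -> {sorted(dev.lost_if_ssd_dies())}")
```

Writeback is the fast option for writes precisely because it doesn’t wait for the HDD, which is also what makes it the risky one.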

Also, does anyone run a setup like this themselves?

For many games, the loading times are not thaaaat different when comparing HDD vs SSD vs NVME. (Depends on how impatient you are tbh.) And it barely affects FPS.

The biggest appeal of NVME/SSD for me is having a snappy OS.

So I would put your rarely played games on a cheap, big HDD and keep your OS and a couple of the most frequent games on the NVME. (In the Steam interface you can easily move games to a new drive.)

I find it to be a much simpler solution than setting up a multi tiered storage system.

Some sources:

https://www.legitreviews.com/game-load-time-benchmarking-shootout-six-ssds-one-hdd_204468

https://www.phoronix.com/review/linux-gaming-disk/3

https://www.pcgamer.com/anthem-load-times-tested-hdd-vs-ssd-vs-nvme/

Seconded, move the games to the NVME if you notice slow load times or textures not rendering quickly enough.

Thanks for citing sources

It actually performed decently until Apple gimped the SSD part to a mere 32 GB (down from 128 GB) in newer iMac models.

…depends what your use pattern is, but I doubt you’d enjoy it.

The problem is the cached data will be fast, but the uncached will, well, be on a hard drive.

If you have enough cached space to keep your OS and your used data on it, it’s great, but if you have enough disk space to keep your OS and used data on it, why are you doing this in the first place?

If you don’t have enough cache drive to keep your commonly used data on it, then it’s going to absolutely perform worse than just buying another SSD.
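A quick way to see the “fits vs doesn’t fit” cliff is to simulate a plain LRU block cache re-reading the same working set. This is a simplified sketch: real implementations (dm-cache, which LVM cache is built on, or bcache) use smarter policies than pure LRU, and a strictly in-order re-read is the worst case for LRU, so treat the numbers as an illustration of the trend rather than a benchmark.

```python
from collections import OrderedDict


class LRUCache:
    """Block cache that evicts the least recently used block when full."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.blocks = OrderedDict()  # block id -> None, oldest first
        self.hits = 0
        self.misses = 0

    def access(self, block: int) -> None:
        if block in self.blocks:
            self.hits += 1
            self.blocks.move_to_end(block)  # mark as most recently used
        else:
            self.misses += 1  # served from the slow HDD, then promoted
            self.blocks[block] = None
            if len(self.blocks) > self.capacity:
                self.blocks.popitem(last=False)  # evict the LRU block

    @property
    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def simulate(cache_blocks: int, working_set: int, passes: int = 10) -> float:
    """Re-read the same working set `passes` times, in order."""
    cache = LRUCache(cache_blocks)
    for _ in range(passes):
        for block in range(working_set):
            cache.access(block)
    return cache.hit_rate


print(simulate(cache_blocks=100, working_set=80))   # 0.9: only the first pass misses
print(simulate(cache_blocks=100, working_set=120))  # 0.0: every block is evicted before reuse
```

A working set only 20% larger than the cache takes the hit rate from 90% to nothing in this worst case, which is the “absolutely perform worse” scenario.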

So I guess if this is an ‘I keep my whole Steam library installed, but only play 3 games at a time’ kind of use case, it’ll probably work fine.

For everything else, eh, I probably wouldn’t.

Edit: a good use case for this is more the ‘I have 800TB of data, but 99% of it is historical and the daily working set is just a couple hundred gigs’ NAS type of thing.

I’m curious what type of workflow you’d have that consistently uses mainly the same data. I’m probably biased because I like to try software out, but I can’t imagine (outside office use) a loop that would remain this closed.

It is mostly professional/office use where this makes sense. I’ve implemented this (well, a similar thing that does the same thing) for clients that want versioning and compliance.

I’ve worked with/for a lot of places that keep everything because disks are cheap enough that they’ve decided it’s better to have a copy of every git version than not have one and need it some day.

Or places that have compliance reasons to have to keep copies of every email, document, spreadsheet, picture and so on. You’ll almost never touch “old” data, but you have to hold on to it for a decade somewhere.

It’s basically cold storage that can immediately pull the data into a fast cache if/when someone needs the older data, but otherwise it just sits there forever on a slow drive.

Back when SSDs were expensive and tiny they used to sell hybrid drives which were a normal sized HDD with a few gigs of SSD cache built in. Very similar to your proposal. When I upgraded from a HDD to a hybrid it was like getting a new computer, almost as good as a real SSD would have been.

I say go for it.

If it’s all Steam games then you could just move games around as needed, no need for a fancy automatic solution.

I used to run an HDD with an SSD cache. It’s deffo not as fast as a normal SSD. NVMe storage is also very cheap. You can get a 2TB NVMe for the same price as SATA.

In all honesty, I’d just keep things simple and go for a SSD.

How much storage do you need? If it’s just 2TB - or if you’re just future proofing it and don’t anticipate needing more than 2TB within the next few years - I’d pick one SSD. Even the cheaper ones will give you a better performance than an SSD + HDD combo.

Thanks for the advice, I’m probably going to need about 2-3 TiB. I guess I’ll just need to figure out a way to offload some more data to my server to keep it under 2 TiB.

Doing this with ZFS is called L2ARC and is very easy to set up.

L2ARC is not a read cache in the conventional sense, but something closer to swap for disks only. It is only effective if your ARC hit rate is really low from memory constraints, although I’m not sure how things stack up now with persistent L2ARC. ZFS does have special allocation devices, though, where metadata and optionally small blocks of data (which HDDs struggle with) can go, but you can lose data if these devices fail. There’s also the SLOG, where sync writes can go. It’s often useful to use something like Optane drives for it.

Personally, I’d just keep separate drives. A lot of caching methods are afterthoughts (bcache is not really maintained as Kent is now working on bcachefs) or, like ZFS, are really complex and are not true read/writeback caches. In particular, LVM cache can, depending on its configuration, lead to data loss if a cache device is lost, and LVM itself can incur some overhead.

Flash is cheap. A 2TB NVMe drive is now roughly the cost of 2 AAA games (which is sad, really). OP should just buy a new drive.

L2ARC only does metadata out of the box. You have to tell it to do data & metadata. Plus for everything in L2ARC there has to be a memory page for it. So for that reason it’s better to max out your system memory before doing L2ARC.
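The RAM cost scales with the number of blocks on the L2ARC device rather than its raw size, because each cached block needs a small header kept in RAM. Rough arithmetic below; `header_bytes=70` is an assumed placeholder (the real per-block header size depends on the OpenZFS version), and the function name is made up, so check your version’s documentation before sizing anything.

```python
GiB = 1024 ** 3
KiB = 1024


def l2arc_header_ram(l2arc_bytes: int, avg_block_bytes: int, header_bytes: int = 70) -> int:
    """Rough RAM (in bytes) needed to keep headers for a full L2ARC device.

    header_bytes is an ASSUMED placeholder; the real per-block header size
    depends on the OpenZFS version.
    """
    cached_blocks = l2arc_bytes // avg_block_bytes
    return cached_blocks * header_bytes


# Same 256 GiB L2ARC device, filled with large vs small blocks.
for block_size in (128 * KiB, 16 * KiB):
    ram = l2arc_header_ram(256 * GiB, block_size)
    print(f"{block_size // KiB:>3} KiB blocks -> {ram / GiB:.2f} GiB of RAM for headers")
```

With the same assumed header size, the 16 KiB case needs eight times as much RAM as the 128 KiB case for the same device.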

It’s also not a cache in the way that LVM cache and bcache are.

At least that’s my understanding from having used it on storage servers and reading the documentation.

Any reason why you can’t buy a 2TB SSD and have both a 1TB and 2TB? I have another comment on this thread outlining the complexities of caching on Linux.

I don’t have an available slot for another NVMe, and I wanted to avoid SATA because the prices are too similar despite the performance difference.

Do you have any spare PCIe slots? You can get M.2 PCIe adapter cards.

There is 1 leftover slot, but it’s only PCI Express 3.0 x1. That won’t be sufficient, right?
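Some back-of-the-envelope numbers for the x1 question: PCIe 3.0 runs at 8 GT/s per lane with 128b/130b encoding, and SATA III at 6 Gb/s with 8b/10b encoding. These are raw link ceilings; real drives lose a bit more to protocol overhead. The helper function is just for illustration.

```python
def link_throughput_mb_s(gigatransfers: float, lanes: int, payload_bits: int, line_bits: int) -> float:
    """Raw link bandwidth in MB/s after line encoding (ignores protocol overhead)."""
    bits_per_second = gigatransfers * 1e9 * lanes * payload_bits / line_bits
    return bits_per_second / 8 / 1e6


pcie3_x1 = link_throughput_mb_s(8, 1, 128, 130)  # PCIe 3.0 x1: 8 GT/s, 128b/130b
pcie3_x4 = link_throughput_mb_s(8, 4, 128, 130)  # typical PCIe 3.0 x4 M.2 slot
sata3 = link_throughput_mb_s(6, 1, 8, 10)        # SATA III: 6 Gb/s, 8b/10b

print(f"PCIe 3.0 x1: {pcie3_x1:.0f} MB/s")
print(f"PCIe 3.0 x4: {pcie3_x4:.0f} MB/s")
print(f"SATA III:    {sata3:.0f} MB/s")
```

So an NVMe drive in a 3.0 x1 slot would top out around 1 GB/s: still above SATA’s ceiling, but about a quarter of what a 3.0 x4 M.2 slot allows.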

You can do it but I wouldn’t recommend it for your use-case.

Caching is nice but only if the data that you need is actually cached. In the real world, this is unfortunately not always the case:

- Data that you haven’t used for a while may be evicted. If you need something infrequently, it’ll be extremely slow.

- The cache layer doesn’t know what is actually important to be cached and cannot make smart decisions; all it sees is IO operations on blocks. Therefore, not all data that is important to cache is actually cached. Block-level caching solutions may only store some data in the cache where they (with their extremely limited view) think it’s most beneficial. Bcache for instance skips the cache entirely if writing the data to the cache would be slower than the assumed speed of the backing storage and only caches IO operations below a certain size.
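To make the “only caches IO below a certain size” point concrete: bcache has a tunable called `sequential_cutoff` (documented default 4.0M), past which a sequential stream bypasses the cache. The sketch below is a heavily simplified admission policy in that spirit, not bcache’s actual logic; the class name is hypothetical, and it tracks only one stream, whereas real implementations track several concurrent ones.

```python
from dataclasses import dataclass, field

MiB = 1024 * 1024


@dataclass
class SequentialBypass:
    """Hypothetical, simplified admission policy (not bcache's implementation)."""

    sequential_cutoff: int = 4 * MiB  # bypass once a contiguous run exceeds this
    _next_offset: int = field(default=-1, init=False)  # where the run would continue
    _run_bytes: int = field(default=0, init=False)  # size of the current run

    def should_cache(self, offset: int, length: int) -> bool:
        if offset == self._next_offset:
            self._run_bytes += length  # request continues the previous one
        else:
            self._run_bytes = length  # jumped elsewhere: start a new run
        self._next_offset = offset + length
        # Small/random IO is cached; long sequential streams go to the HDD,
        # which handles sequential transfers reasonably well on its own.
        return self._run_bytes <= self.sequential_cutoff


policy = SequentialBypass()
# Stream a 64 MiB file in 1 MiB requests: only the first 4 MiB gets cached.
streamed = [policy.should_cache(i * MiB, MiB) for i in range(64)]
print(sum(streamed), "of 64 sequential requests cached")
# Scattered 4 KiB reads each start a new run, so all of them are cached.
scattered = [policy.should_cache(off, 4096) for off in (700 * MiB, 3 * MiB, 900 * MiB)]
print(all(scattered))
```

This is exactly the “limited view” problem: a large game file read front to back looks like a stream the HDD can handle, so most of it never reaches the cache.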

Having data that must be fast always stored on fast storage is the best.

Manually separating data that needs to be fast from data that doesn’t is almost always better than relying on dumb caching that cannot know what data is the most beneficial to put or keep in the cache.

This brings us to the question: What are those 900GiB you store on your 1TiB drive?

That would be quite a lot if you only used the machine for regular desktop purposes, so clearly you’re storing something else too.

You should look at that data and see what of it actually needs fast access speeds. If you store multimedia files (video, music, pictures etc.), those would be good candidates to instead store on a slower, more cost efficient storage medium.

You mentioned games which can be quite large these days. If you keep currently unplayed games around because you might play them again at some point in the future and don’t want to sit through a large download when that point comes, you could also simply create a new games library on the secondary drive and move currently not played but “cached” games into that library. If you need it accessible it’s right there immediately (albeit with slower loading times) and you can simply move the game back should you actively play it again.
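If you go the two-library route, picking which games to demote can be as simple as “least recently played first, until enough space is free”. A minimal sketch; the `Game` records, sizes and dates are hypothetical, and the actual move would still be done through Steam’s interface.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Game:
    name: str
    size_gib: float
    last_played: date


def pick_games_to_move(games: list, free_gib: float, target_free_gib: float) -> list:
    """Choose the least recently played games to move to the slow library
    until the fast drive would have at least target_free_gib free."""
    to_move = []
    for game in sorted(games, key=lambda g: g.last_played):  # oldest first
        if free_gib >= target_free_gib:
            break
        to_move.append(game)
        free_gib += game.size_gib
    return to_move


library = [
    Game("Game A", 120, date(2024, 1, 5)),
    Game("Game B", 60, date(2024, 6, 1)),
    Game("Game C", 90, date(2023, 11, 20)),
]
for g in pick_games_to_move(library, free_gib=100, target_free_gib=250):
    print("move", g.name)
```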

You could even employ a hybrid approach where you carve out a small portion of your (then much emptier) fast storage to use for caching the slow storage. Just a few dozen GiB of SSD cache can make a huge difference in general HDD usability (e.g. browsing it) and 100-200 GiB could accelerate a good bit of actual data too.

According to Filelight, I have 457 GiB in my home directory. 85 GiB of that is games, but I also have several virtual machines which take up about 100 GiB. The / folder contains 38 GiB, most of which is due to the Nix store (15 GiB) and system libraries (/usr is 22.5 GiB). I made a post about trying to figure out what was taking up storage 9 months ago. It’s probably time to try pruning Docker again.

EDIT:

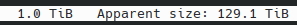

ncdu says I’ve stored 129.1 TiB lol

EDIT 2: docker and podman are using about 100 GiB of images.

I also have several virtual machines which take up about 100 GiB.

This would be the first thing I’d look into getting rid of.

Could these just be containers instead? What are they storing?

nix store (15 GiB)

How large is your (I assume home-manager) closure? If this is 2-3 generations worth, that sounds about right.

system libraries (/usr is 22.5 GiB)

That’s extremely large. Like, 2x what you’d expect a typical system to have.

You should have a look at what’s using all that space using your system package manager.

EDIT:

ncdu says I’ve stored 129.1 TiB lol

If you’re on btrfs and have a non-trivial subvolume setup, you can’t just let ncdu loose on the root subvolume. You need to take a more principled approach.

For assessing your actual working size, you need to ignore snapshots, for instance, as those mostly share the same extents as your “working set”.

You need to keep in mind that snapshots do themselves take up space too though, depending on how much you’ve deleted or written since taking the snapshot.
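Why ncdu can report 129 TiB on a 1 TiB drive: snapshots reference the same physical extents as the live files, so summing per-file sizes counts those extents once per snapshot. The paths, sizes and extent layout below are hypothetical, and real btrfs extent sharing is more involved (partially overwritten extents, compression), so this is only a simplified model.

```python
GiB = 1024 ** 3

# Hypothetical layout: a 10 GiB VM image plus three snapshots of it.
# Each extent is (physical_offset, length). The last GiB of the live image
# was rewritten after the snapshots were taken, so it lives somewhere new.
files = {
    "/home/vm.qcow2": [(0, 9 * GiB), (10 * GiB, 1 * GiB)],
    "/.snapshots/1/home/vm.qcow2": [(0, 9 * GiB), (9 * GiB, 1 * GiB)],
    "/.snapshots/2/home/vm.qcow2": [(0, 9 * GiB), (9 * GiB, 1 * GiB)],
    "/.snapshots/3/home/vm.qcow2": [(0, 9 * GiB), (9 * GiB, 1 * GiB)],
}


def naive_total(files: dict) -> int:
    """Sum of every file's extents: what a tool that can't see sharing adds up."""
    return sum(length for extents in files.values() for _, length in extents)


def unique_total(files: dict) -> int:
    """Bytes actually allocated on disk: each distinct extent counted once."""
    unique = {extent for extents in files.values() for extent in extents}
    return sum(length for _, length in unique)


print(naive_total(files) // GiB, "GiB counted naively")
print(unique_total(files) // GiB, "GiB actually allocated")
```

Here the naive sum is 40 GiB while only 11 GiB is allocated; the extra 1 GiB beyond the live file is what the snapshots really cost, namely the data overwritten since they were taken.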

btdu is a great tool to analyse space usage of a non-trivial btrfs setup in a probabilistic fashion. It’s not available in many distros, but you have Nix and we have it of course ;)

Snapshots are the #1 most likely cause of your space usage woes. Any space usage that you cannot explain using your working set is probably caused by them.
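The probabilistic approach is easy to illustrate: probe random offsets on the disk and count who owns each one. Each owner’s share of the hits estimates its share of the disk, and the estimate tightens as samples accumulate. btdu, as I understand it, does this over btrfs’s logical address space and resolves each sample to its extents and paths; the layout and owner names below are made up for the toy.

```python
import bisect
import random

# Hypothetical disk layout in GiB: owners of [0, 400), [400, 700), [700, 800), [800, 1000).
BOUNDARIES = [400, 700, 800, 1000]
OWNERS = ["snapshots", "/home", "/nix/store", "unallocated"]
DISK_GIB = 1000


def owner_of(offset_gib: float) -> str:
    """Return which owner the given offset falls into."""
    return OWNERS[bisect.bisect_right(BOUNDARIES, offset_gib)]


def estimate_usage(samples: int, seed: int = 0) -> dict:
    """Estimate each owner's usage (GiB) by probing uniformly random offsets."""
    rng = random.Random(seed)
    hits = {}
    for _ in range(samples):
        offset = rng.randrange(DISK_GIB * 1024) / 1024  # random MiB position, in GiB
        owner = owner_of(offset)
        hits[owner] = hits.get(owner, 0) + 1
    return {owner: DISK_GIB * n / samples for owner, n in hits.items()}


for owner, gib in sorted(estimate_usage(20_000).items()):
    print(f"{owner:>12}: ~{gib:.0f} GiB")
```

The nice property is that the cost depends on the number of samples, not on how many files or snapshots exist, which is why this approach copes with setups that make ncdu report nonsense.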

Also: Are you using transparent compression? IME it can reduce space usage of data that is similar to typical Nix store contents by about half.

I was using this kind of a setup a long time ago with 120GB SSD and 1TB HDD. I’ve found the overall speedup pretty remarkable. It felt like a 1TB SSD most of the time. So, having a cache drive of around 10% of the main drive seems like a good size-to-cost compromise. Having a cache 50% the size of the main storage feels like a waste to me.

Currently have two 1TB NVMe drives in front of around 6TB of HDDs. It works really nicely for keeping a personal Steam cache on the HDDs in case I pick up an old game with friends, or want to play a large game but only use part of it (i.e. CoD Zombies).

It’s also super helpful for shared filesystems (Syncthing or NFS), as it’s able to support peripheral computers a lot more dynamically than I’d ever care to configure personally. (If that’s unclear: I use it for a Jellyfin server, a Crafty instance, and some coding projects, things that see heavy use in bursts but tend to have an attention lifespan.)

Using bcachefs with backups myself, and after a couple of months my biggest worry is the kernel drama more than the fs itself.

I’ve had no problems with bcache. Been using it with an SSD and a 10TB disk for years, with btrfs on top.

If by cache you mean that your entire system is on your NVMe drive and the HDD is for backups or games that you’re not currently playing but don’t want to reinstall, yes.

I used to do this all the time! So in terms of speed bcache is the fastest, but it’s not as well supported as lvm cache. IMHO lvm cache is plenty fast enough for most uses.

Is it going to be as fast as a NVME ssd? Nope. But it should be about as fast as a SATA ssd if not a little slower depending on how it’s getting the data. If you’re willing to take that trade off it’s worth it. Though anything already cached is going to be accessed at NVME speeds.
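The “fast when cached, HDD-slow when not” trade-off boils down to a weighted average: t_avg = h * t_cache + (1 - h) * t_hdd, where h is the hit rate. The latencies below are rough illustrative assumptions for random reads, not measurements of any particular drive, and the function name is just for illustration.

```python
def effective_latency_ms(hit_rate: float, cache_ms: float, hdd_ms: float) -> float:
    """Average latency of a read through a cache: hits cost cache_ms, misses hdd_ms."""
    return hit_rate * cache_ms + (1 - hit_rate) * hdd_ms


# Illustrative random-read latencies (assumptions, not measurements).
NVME_MS = 0.1
HDD_MS = 10.0

for h in (0.50, 0.90, 0.99):
    print(f"hit rate {h:.0%}: {effective_latency_ms(h, NVME_MS, HDD_MS):.2f} ms average")
```

Because a miss costs about 100 times as much as a hit in this example, even a 99% hit rate ends up roughly twice as slow on average as the NVMe drive alone, which is why cache size relative to your working set matters so much.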

So it’s totally worth it if you need bigger storage but can’t afford the SSD. I would go bigger on your HDD though, if you can, because unless you’re frequently accessing more than the capacity of your SSD, the caching will work extremely well for both reads and writes. So your Steam games will feel like they’re on an SSD most of the time, and everything else you do will “feel” snappy too.

Have you also tried using Bleachbit to free up storage space?

I haven’t, I’ll try that

EDIT: I’ve tried it and it had little effect (< 1 GiB)

Apple tried it a decade ago. It was called the Fusion Drive. It performed about as well as you’d expect. macOS saw the combined storage, but the hardware and OS managed the pair as a single unit.

If there’s a good tiered storage daemon on your OS of choice, go for it!