Hello, I’m currently going back and forth on which new home server to build. What I’ve stumbled across: the i9 and the new Core Ultra 9 CPUs all support a maximum of only 192GB RAM. However, some of the mainboards support 256GB (with 4 RAM slots and dual channel). Why?

I want to have the option of maxing out the RAM later.

I could buy 4x48GB RAM now and be at 192GB. Maybe I would be annoyed later that 48 GB of RAM is still “missing”. But what if I buy 4x 64GB RAM? 3x64 GB RAM makes no sense, because then dual channel is not used. 4x64 is probably not recognized by the processor?

Or are there LGA1851 or LGA1700 processors capable of handling 256GB RAM?

Because there’s no advantage to having this much RAM in an economy build. If you’re looking to max out your mainboard RAM then you’re looking for a Threadripper anyway, not some economy i9…

If you need that much RAM, I would look at getting an AMD Threadripper. If you need DDR5, the 7960X is their “budget” option, or if you are fine with DDR4, there are lots of used 3000 and 5000 series chips for cheap.

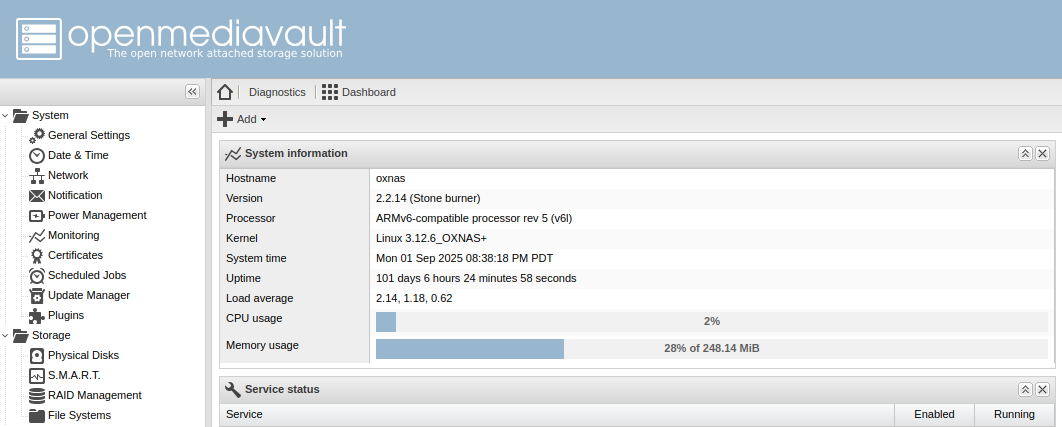

I personally believe you are overbuilding. For example, my OpenMediaVault Samba server and DLNA server run on a single-board computer that has 256 megabytes of RAM. Yes, MB. And it still has RAM free without swap. And I should alter my clock.

Oh, I’m not using it for OMV and Samba. I’m using it for ollama/open webui with RAM instead of VRAM.

All current popular AI is meant to run on GPU. Why are you going to spend more money to run it on hardware for which it isn’t intended?

This isn’t really true — a lot of the newer MoE models run just fine on a CPU coupled with gobs of RAM. Yes, they won’t be quite as fast as a GPU, but getting 128GB+ of VRAM is out of reach of most people.

You can even run Deepseek R1 671b (Q8) on a Xeon or Epyc with 768GB+ of RAM, at 4-8 tokens/sec depending on configuration. A system supporting this would be at least an order of magnitude cheaper than a GPU setup to run the same thing.
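A rough back-of-the-envelope sketch of why those numbers are plausible. It assumes the weights dominate memory use (no KV cache or runtime overhead), that every active weight is read from RAM once per generated token, and that R1 activates about 37B of its 671B parameters per token. The 460.8 GB/s figure is the theoretical peak of a hypothetical 12-channel DDR5-4800 server, not a measurement.

```python
def model_size_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes), ignoring overhead."""
    return params_billion * bits_per_weight / 8


def max_tokens_per_sec(bandwidth_gb_s: float,
                       active_params_billion: float,
                       bits_per_weight: float) -> float:
    """Bandwidth-bound ceiling: all active weights are streamed once per token."""
    return bandwidth_gb_s / model_size_gb(active_params_billion, bits_per_weight)


# 671B parameters at 8 bits per weight (Q8): does it fit in 768 GB?
total_gb = model_size_gb(671, 8)
print(f"weights: {total_gb:.0f} GB")

# Assumption: ~37B active parameters per token (MoE), 460.8 GB/s peak bandwidth.
ceiling = max_tokens_per_sec(460.8, 37, 8)
print(f"upper bound: {ceiling:.1f} tokens/s")
```

Real throughput lands below this ceiling, which fits the 4-8 tokens/sec reported above.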

What the heck are you self-hosting that anything beyond 64G is even taken into account?

If you’re really going to need that much RAM, start looking at servers with multiple sockets. They support absurd amounts of RAM in a single chassis. I think the biggest regularly-available servers have four sockets, but all but the most basic have two.

For clarification: it’s for a proxmox instance. I wanna use the ram for open webzine/ollama.

What is openwebzine? Can’t find any info on it.

sorry, fat fingers on tablet: I mean “open webui”.

maybe it was openwebui and the phone corrected it to openwebzine

But aside from buying a real truck instead of a typhoon, Intel’s memory support might not be a hard limit. It probably is, but it might not be.

More likely the motherboard’s memory controller can handle 256GB, so if a new processor comes along with support for 256GB it will work.

Intel’s official CPU RAM limits are often wrong for some reason. If a mainboard that supports that CPU lists a higher maximum, it’ll probably work. I usually search forums to see if someone uses the same configuration and how much RAM they got to work.

The real question is: why do you need this much memory?

If it’s not actually going to be used, you’re spending more money acquiring it now than you would later.

I’ve seen that some want it to host their own LLM. It’s far cheaper to buy DDR5 memory than to somehow get 100+ GB of VRAM. Whether or not this is a good idea is another question.

And 4 sticks are 4 times more prone to break down.

Twice, because usually it’s two sticks.

In any case, RAM failure is rare enough that quadrupling its chances is not gonna make any meaningful difference. Even if it does, RAM is the easiest thing to replace in a PC. You don’t even need to go offline while waiting for a new stick. Someone who’s got the cash to build that thing in the first place won’t be too upset by the cost of another 32GB stick either, I don’t think.

I agree with CameronDev, not so much on the capacity, but the bandwidth. At 100+ GB, the Ryzen/Core platforms are really holding you back with their weak I/O.

If you need that much memory, you might be better off picking up a used Xeon/Epyc from eBay. Their CPU speeds are lower, but the quad-channel RAM could make up for it, depending on what you’re trying to do.

I’d say this is the correct answer. If you’re actually using that much RAM, you probably want it connected to the processor with a wide (fast) bus. I rarely see people do it with desktop or gaming processors. It might be useful for some edge-cases, but usually you want an Epyc processor or something like that, or it’s way too much RAM that isn’t connected fast enough.

My edge case is: I wanna spin up an AI LXC in Proxmox with ollama and open webui, using RAM instead of VRAM. But it should be low on power consumption at idle. That’s why I want an Intel i9 or Core Ultra 9 with maxed-out RAM. It idles at low power, but can run bigger AI models using RAM instead of VRAM. It would not be as fast as with GPUs, but that’s OK.

I think a Xeon would need more power… much more power at idle. I have an old Xeon E3-1275 v5, 32 GB RAM with a supermicro D3417-B mainboard and it idles at about 10 watts. This is fantastic, but I don’t think I can get a good newer Xeon with low consumption like this. But I wanna send the old lady to retirement.

He goes over the different ways to run a self-hosted AI without a GPU, like you want to do, including maxing RAM and using PCI-e M.2 add-on boards.

Thank you very much! This leads to this article: https://forum.level1techs.com/t/deepseek-deep-dive-r1-at-home/225826/2 Maybe the 9959x is what I am looking for.

AI inference is memory-bound. So, memory bus width is the main bottleneck. I also do AI on an (old) CPU, but the CPU itself is mainly idle and waiting for the memory. I’d say it’ll likely be very slow, like waiting 10 minutes for a longer answer. I believe all the AI people use Apple silicon because of the unified memory and its bus width. Or some CPU with multiple memory channels. The CPU speed doesn’t really matter, you could choose a way slower one, because the actual multiplications aren’t what slows it down. But you seem to be doing the opposite: getting a very fast processor with just 2 memory channels.

The i9-10900X has 4 channels (quad-channel DDR4-2933, PC4-23466, 93.9 GB/s). Would this be better in this respect than an i9-14xxx (dual-channel DDR5-5600, PC5-44800, 89.6 GB/s)?

Do the numbers (93 GB/s and 89 GB/s) mean the speed for one RAM stick or the speed all together? Maybe an old i9-10xxx with 4-channel RAM would be better than a new dual-channel one.
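For what it’s worth, both figures match the theoretical peak across all channels combined, not per stick. A minimal sketch of that arithmetic, assuming the usual 64-bit (8-byte) data path per channel and 1 GB = 1000 MB; the function name is just for illustration:

```python
def peak_bandwidth_gb_s(mega_transfers_per_s: int,
                        channels: int,
                        bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s: transfers/s x bytes per transfer x channels."""
    return mega_transfers_per_s * bus_bytes * channels / 1000


quad_ddr4 = peak_bandwidth_gb_s(2933, channels=4)  # quad-channel DDR4-2933
dual_ddr5 = peak_bandwidth_gb_s(5600, channels=2)  # dual-channel DDR5-5600

print(f"quad-channel DDR4-2933: {quad_ddr4:.1f} GB/s")
print(f"dual-channel DDR5-5600: {dual_ddr5:.1f} GB/s")
```

So on paper the two setups are nearly tied; sustained bandwidth in practice is lower than either peak.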

So if I had more memory channels it would be better to have say ollama use the cpu versus the gpu?

Well, the numbers I find on Google are: an Nvidia 4090 can transfer 1008 GB/s. And an i9 does something like 90 GB/s. So you’d expect the CPU to be roughly 11 times slower than that GPU at fetching numbers from memory.

I think if you double the number of DDR channels for your CPU, and if that also meant your transfer rate would double to 180 GB/s, you’d be roughly 6 times slower than the 4090. I’m not sure if it works exactly like that. But I’d guess so. And a larger model also means more numbers to transfer. So if you now also use your larger memory to run a 70B parameter model instead of a 12B parameter model (or whatever fits on a GPU), your tokens will come in at about a 65th of the speed at 90 GB/s (11 times slower for the bandwidth, times roughly 6 for the model size).
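The estimate above can be written out as a small sketch. It assumes token speed scales linearly with memory bandwidth and inversely with parameter count, with both models at the same quantization. That is a simplification, and the function name is made up for illustration.

```python
def relative_slowdown(gpu_bw_gb_s: float,
                      cpu_bw_gb_s: float,
                      gpu_model_params_b: float,
                      cpu_model_params_b: float) -> float:
    """How many times slower the CPU setup generates tokens than the GPU setup,
    if speed is proportional to bandwidth / model size."""
    bandwidth_factor = gpu_bw_gb_s / cpu_bw_gb_s
    size_factor = cpu_model_params_b / gpu_model_params_b
    return bandwidth_factor * size_factor


# Same 12B model on both: only the bandwidth gap matters.
print(round(relative_slowdown(1008, 90, 12, 12), 1))   # dual channel, ~90 GB/s
print(round(relative_slowdown(1008, 180, 12, 12), 1))  # doubled channels

# 70B model in RAM versus a 12B model on the GPU.
print(round(relative_slowdown(1008, 90, 12, 70)))
print(round(relative_slowdown(1008, 180, 12, 70)))
```

Under these assumptions, doubling the memory channels halves the penalty, but the larger model still costs far more than the extra channels win back.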

If I were considering a server with 256GB of RAM, I would go for server hardware and not try to use consumer stuff.

256GB of RAM seems well beyond standard self-hosting. What are you planning on running?!

OP wants to store all of their porn collection in RAM