I sometimes think that maybe as a society we’d be better off relaxing nudity taboos or something.


Yeah, it’s not an unreasonable connection given that information – Florida does also have palm trees – but I’m betting that it’s gonna be the other coast.

Florida is flat and pretty wet. It looks pretty lush compared to the Southwest.

kagis

Florida:

https://cdn.landsearch.com/listings/4CVJR/small/bell-fl-126924064.jpg

Here’s a shot from around Los Angeles out in California.

It’s dry enough that you won’t just have vegetation growing everywhere, and unless you’re gonna water it, anything that survives has to be able to tolerate little water. The planter there doesn’t have grass or anything.

I don’t think it’s Florida. Those are arid-land plants. I believe that that’s some kind of yucca in the planter in the foreground. That tree may be mesquite. The palm may be a California fan palm.

From the plants, I’d guess American Southwest somewhere, maybe southern California.

I think that the license plates are dark-on-light.

California would fit:

https://en.wikipedia.org/wiki/Vehicle_registration_plates_of_California

Arizona would fit:

https://en.wikipedia.org/wiki/Vehicle_registration_plates_of_Arizona

I don’t think it’s New Mexico:

https://en.wikipedia.org/wiki/Vehicle_registration_plates_of_New_Mexico

Or Nevada:

https://en.wikipedia.org/wiki/Vehicle_registration_plates_of_Nevada

Rural areas in the US tend to have more pickup trucks. I don’t see a bunch of trucks.

Walmart is a value store and tends to be more common in less-wealthy areas.

The cars are older, which could also indicate a less-wealthy area, but might also be the age of the photo; I don’t know the age.

https://logo-timeline.fandom.com/wiki/Walmart

The Walmart logo is the 2008+ version, so the photo cannot predate 2008.

If I were gonna make a guess from that, I’d go with Los Angeles or San Diego or thereabouts.

EDIT: The image is a recent Google Street View shot; the watermark says copyright 2024. So those are indeed older cars.

If that email is actually from Logitech, it probably has some way to unsubscribe. Might have added you for some nonsense reason like a warranty registration, but I’ve never hit problems with a reputable company not providing a way to unsubscribe.

The random scam stuff…yeah, probably can’t do much about that.

One possibility I’ve wondered about is whether, someday, email shifts to a whitelist-based system. I mean, historically we’ve always let people be contacted as long as they know someone’s physical address or phone number or email address, and so databases of those have value – they become keys to reach people. But we could simply have some sort of easy way to authorize people and block everyone else. In a highly-connected world, that might be a more reasonable way to do things.
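As a rough illustration of the idea, here’s a minimal Python sketch of an allowlist router. The AllowlistFilter class and its "inbox"/"held" outcomes are hypothetical names I made up; only EmailMessage and parseaddr come from Python’s standard email package.

```python
from __future__ import annotations

from email.message import EmailMessage
from email.utils import parseaddr


class AllowlistFilter:
    """Hypothetical allowlist: approved senders reach the inbox, everyone else is held."""

    def __init__(self, approved: set[str] | None = None) -> None:
        # Compare addresses lowercased, which is how most providers treat them in practice.
        self.approved = {addr.lower() for addr in (approved or set())}

    def authorize(self, address: str) -> None:
        """The 'easy way to authorize people' step: add one sender."""
        self.approved.add(address.lower())

    def revoke(self, address: str) -> None:
        """Block a previously authorized sender again."""
        self.approved.discard(address.lower())

    def route(self, msg: EmailMessage) -> str:
        """Return 'inbox' for an authorized sender and 'held' for anyone else."""
        _, sender = parseaddr(str(msg.get("From", "")))
        return "inbox" if sender.lower() in self.approved else "held"
```

One caveat: the From header is trivially forged, so anything like this would have to sit on top of sender authentication such as SPF, DKIM, and DMARC to mean much.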

It’s been fine for me too. That said, I don’t eat there that often.

Plus, even if you manage to never, ever have a drive fail, accidentally delete something that you wanted to keep, inadvertently screw up a filesystem, crash into a corruption bug, have malware destroy stuff, make an error writing a script that causes it to wipe data, just realize that an old version of something you overwrote was still something you wanted, or run into any of the other ways in which you could lose data…

You gain the peace of mind of knowing that your data isn’t a single point of failure away from being gone. I remember some pucker-inducing moments before I ran backups. Even aside from not losing data on a number of occasions, I could sleep a lot more comfortably on the times that weren’t those occasions.
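For the "overwrote an old version" case in particular, what helps is keeping multiple dated copies rather than a single mirror. A bare-bones Python sketch of that idea, assuming plain full copies; snapshot() and list_snapshots() are hypothetical helpers, and real backup tools deduplicate and prune rather than copying everything every time.

```python
from __future__ import annotations

import shutil
from datetime import datetime, timezone
from pathlib import Path


def snapshot(source: Path, backup_root: Path, now: datetime | None = None) -> Path:
    """Copy `source` into a new timestamped folder under `backup_root` and return it.

    Earlier snapshots are never touched, so a file that was later overwritten
    or deleted in `source` can still be pulled out of an older snapshot.
    """
    now = now or datetime.now(timezone.utc)
    # Hyphens instead of colons keep the folder name valid on Windows too.
    dest = backup_root / now.strftime("%Y-%m-%dT%H-%M-%S-%f")
    # copytree refuses to write into an existing destination, protecting old snapshots.
    shutil.copytree(source, dest)
    return dest


def list_snapshots(backup_root: Path) -> list[Path]:
    """Snapshots oldest-first; the timestamp format sorts correctly as text."""
    return sorted(p for p in backup_root.iterdir() if p.is_dir())
```

To also cover drive failure or malware, backup_root has to live on a different disk, ideally a different machine.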

I don’t know how feasible it’d be to get a meaningful metric.

https://www.goldeneaglecoin.com/Guide/value-of-all-the-gold-in-the-world

Value of all of the gold in the world

$13,611,341,061,312.04

Based on the current gold spot price of $2,636.98

https://en.wikipedia.org/wiki/Jeff_Bezos

He is the second wealthiest person in the world, with a net worth of US$227 billion as of November 7, 2024, according to Forbes and Bloomberg Billionaires Index.[3]

So measured in gold at current prices, he’d have about one-sixtieth of the present global gold supply.
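Checking that arithmetic with the two figures quoted above:

```python
# Figures quoted above, in US dollars.
WORLD_GOLD_VALUE = 13_611_341_061_312.04  # Golden Eagle Coin estimate at a $2,636.98 spot price
BEZOS_NET_WORTH = 227e9                   # Forbes / Bloomberg, as of November 7, 2024

share = BEZOS_NET_WORTH / WORLD_GOLD_VALUE
print(f"share of world gold: {share:.2%}")       # share of world gold: 1.67%
print(f"about 1/{1 / share:.0f} of the supply")  # about 1/60 of the supply
```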

However:

It’s hard to know what the distribution of gold hoards is. One dragon might have an exceptionally large hoard.

It’s hard to know how many dragons we’re working with.

It’s likely that a world with dragons has a different value of gold.

That gold in our world isn’t actually accessible to Bezos at that price. If he tried buying that much, it’d drive up the price, reducing the share of the global supply that he could actually afford.

I know that modern dryers often use a humidity sensor, and I can imagine that it’s hard to project a remaining time from that.

But I don’t know what sort of sensors or dynamic wash time a washer would use. I thought that they were just timer-based.

kagis

Oh. Sounds like they use water level sensors, and time to drain is a factor, so if draining is really slow, it’ll do that.
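A toy model of that behavior, purely hypothetical (I don’t know what any manufacturer’s firmware actually does): the countdown comes from a nominal step time, but it’s held at one minute as long as the water level sensor still sees water. DrainStep and its fields are made-up names.

```python
import math
from dataclasses import dataclass


@dataclass
class DrainStep:
    """Hypothetical final drain step of a wash cycle."""

    nominal_seconds: float  # how long the step normally takes
    elapsed: float = 0.0    # seconds spent in the step so far

    def tick(self, seconds: float) -> None:
        self.elapsed += seconds

    def displayed_minutes(self, tub_empty: bool) -> int:
        remaining = max(self.nominal_seconds - self.elapsed, 0.0)
        minutes = math.ceil(remaining / 60)
        if not tub_empty:
            # Assumed rule: never count below one minute while the level sensor still sees water.
            minutes = max(minutes, 1)
        return minutes
```

With a clogged filter, tub_empty stays False long after elapsed passes nominal_seconds, so the display just sits at 1, which matches the symptom in that post.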

My clothes washer has had one minute left for the past 7 minutes. (i.redd.it)

Funny… Someone else had a similar issue a few days ago. This was my reply to them:

This sounds like a drainage issue. Not uncommon. I first learned of this on my previous washer several years ago.

The machine took a lot longer to drain than it should have, so what should’ve taken a minute or two took 15.

A potential cause is that your drainage filter is clogged. Most people don’t even know they have one, much less how to clean it.

In MOST modern washers, it’s behind a small hatch on the front of the machine. (It may be located elsewhere, depending on your model.) Open the hatch, pull out a short hose, and unplug the stopper on the hose to drain any excess water (into a small container of some sort). Then remove the filter…

The filter itself is typically a cylindrical piece that resides next to the hose. The filter may need to be unlocked somehow to remove it, but either way, once you slide it out you can clear it off of any buildup of hair, lint, and other gunk that’s collected on it.

Check your user manual (or Google) for your specific model.

If they have a display capable of it, it might be a good idea for washers to tell the user that they’re draining slowly and that checking the filter might be in order.
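Detecting that doesn’t seem like it’d take much, assuming the machine can sample its water level sensor during the drain. A hypothetical Python sketch; the level units, the expected drain rate, and the 0.5 threshold are all made-up parameters that a real machine would have to calibrate.

```python
from __future__ import annotations

SLOW_DRAIN_MESSAGE = "Draining slowly. Check the drain filter."


def drain_rate(levels: list[float], interval_s: float) -> float | None:
    """Average fall in water level per second over evenly spaced samples, or None if too few."""
    if len(levels) < 2 or interval_s <= 0:
        return None
    return (levels[0] - levels[-1]) / (interval_s * (len(levels) - 1))


def drain_warning(
    levels: list[float], interval_s: float, expected_rate: float, slow_factor: float = 0.5
) -> str | None:
    """Return a user-facing hint if draining is much slower than expected, else None."""
    rate = drain_rate(levels, interval_s)
    if rate is None:
        return None
    if rate < expected_rate * slow_factor:
        return SLOW_DRAIN_MESSAGE
    return None
```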

You test fired a 3D printed gun you had no hand in making

I mean, I think that that’s reasonable. But that seems like “get behind something protective and pull the trigger with a string” territory, regardless of who printed it.

If there isn’t some kind of standard safety checklist for printed weapons, I really think that there should be if lots of people are going to be printing these things.

Relevant username.

Aww. Thanks for doing the research, though!

venv nonsense

I mean, I find it annoying that it isn’t more invisible to the end user, and I wish that it could also include a version of Python, but I think that venv is pretty reasonable. It handles non-systemwide library versioning in what I’d call a reasonably straightforward way. Once you know how to do it, it works the same way for each Python program.

Honestly, if there were just a frontend on venv that set up any missing environment and activated the venv, I’d be fine with it.

And I don’t do much Python development, so this isn’t from a “Python awesome” standpoint.
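A frontend like that could be pretty thin. Here’s a rough sketch using only the standard library’s venv and subprocess modules; the function names (venv_python, ensure_venv, run_in_venv) and the optional requirements-file step are my own assumptions, not an existing tool. Rather than "activating" in the shell sense, it just runs the venv’s own interpreter, which picks up the venv’s packages.

```python
from __future__ import annotations

import os
import subprocess
import venv
from pathlib import Path


def venv_python(env_dir: Path) -> Path:
    """Location of the interpreter inside a venv (the layout differs on Windows)."""
    if os.name == "nt":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


def ensure_venv(env_dir: Path, requirements: Path | None = None, with_pip: bool = True) -> Path:
    """Create the venv on first use, optionally install requirements, and return its interpreter."""
    python = venv_python(env_dir)
    if not python.exists():
        # Symlink the interpreter on POSIX, as `python -m venv` does by default there.
        venv.EnvBuilder(with_pip=with_pip, symlinks=(os.name != "nt")).create(env_dir)
        if requirements is not None and with_pip:
            subprocess.run(
                [str(python), "-m", "pip", "install", "-r", str(requirements)],
                check=True,
            )
    return python


def run_in_venv(
    env_dir: Path,
    args: list[str],
    requirements: Path | None = None,
    with_pip: bool = True,
    capture: bool = False,
) -> subprocess.CompletedProcess:
    """Run `python <args>` with the venv's interpreter, so no shell activation is needed."""
    python = ensure_venv(env_dir, requirements, with_pip)
    return subprocess.run([str(python), *args], check=True, capture_output=capture, text=True)
```

Something like run_in_venv(Path(".venv"), ["myscript.py"], Path("requirements.txt")) would build the environment on first use and reuse it afterward. It still uses whatever Python runs it, though, so it doesn’t address the bundled-Python-version wish.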

There has to be soy milk or almond milk or something else that could be used.

Definitely in scope for the community, though.

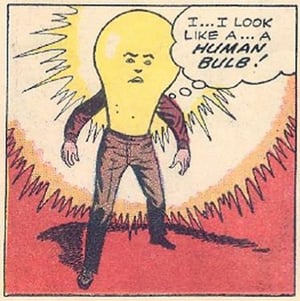

If Wonder Woman doing over-the-top BDSM qualifies, then I see some even more prime examples on /r/outofcontextcomics that I think I’ll submit.

Upon investigation, apparently there is an /r/outofcontextcomics on Reddit with years of submissions which can be sorted by top score and pillaged for comics.

EH!T!!

It eventually became apparent that it wasn’t that generative AIs in 2024 had poor text-rendering skills, but simply that they had unfortunately included Golden Age comic books in their training corpus.

If you’re interested in home automation, I think that there’s a reasonable argument for running it on separate hardware. It doesn’t need much by way of hardware, but you don’t want to take it down, especially if it’s doing things like lighting control.

Same sort of idea for some data-logging systems, like weather stations or ADS-B receivers.

Other than that, though, I’d probably avoid running an extra system just because I have hardware. More power usage, heat, and maintenance.

EDIT: Maybe hook it up to a power management device, if you don’t have that set up, so that you can power-cycle your other hardware remotely.